Working with Google Cloud Platform (compute instance) and Terraform on Unix/Linux

Google Cloud Platform is an infrastructure-as-a-service (IaaS) platform that lets customers build, test and deploy their own applications on Google's infrastructure, on high-performance virtual machines.

Google Compute Engine provides virtual machines that run in Google's data centers and on its worldwide network.

Installing terraform on Unix/Linux

Installation is very simple; I described how to do it here:

Installing terraform on Unix/Linux

In that article I also wrote a script that installs the tool automatically. It was tested on CentOS 6/7, Debian 8 and Mac OS X, and everything works as expected!

To get help on the available commands, run:

$ terraform --help

Usage: terraform [--version] [--help] <command> [args]

The available commands for execution are listed below.

The most common, useful commands are shown first, followed by

less common or more advanced commands. If you're just getting

started with Terraform, stick with the common commands. For the

other commands, please read the help and docs before usage.

Common commands:

apply Builds or changes infrastructure

console Interactive console for Terraform interpolations

destroy Destroy Terraform-managed infrastructure

env Workspace management

fmt Rewrites config files to canonical format

get Download and install modules for the configuration

graph Create a visual graph of Terraform resources

import Import existing infrastructure into Terraform

init Initialize a Terraform working directory

output Read an output from a state file

plan Generate and show an execution plan

providers Prints a tree of the providers used in the configuration

push Upload this Terraform module to Atlas to run

refresh Update local state file against real resources

show Inspect Terraform state or plan

taint Manually mark a resource for recreation

untaint Manually unmark a resource as tainted

validate Validates the Terraform files

version Prints the Terraform version

workspace Workspace management

All other commands:

debug Debug output management (experimental)

force-unlock Manually unlock the terraform state

state Advanced state management

Let's get started!

Working with Google Cloud Platform (compute instance) and Terraform on Unix/Linux

The first thing to do is set up "Cloud Identity". With the Google Cloud Identity service you can give domains, users and accounts in your organization access to Cloud resources, and manage users and groups centrally through the Google Admin console.

Useful reading:

Installing Google Cloud SDK/gcloud on Unix/Linux

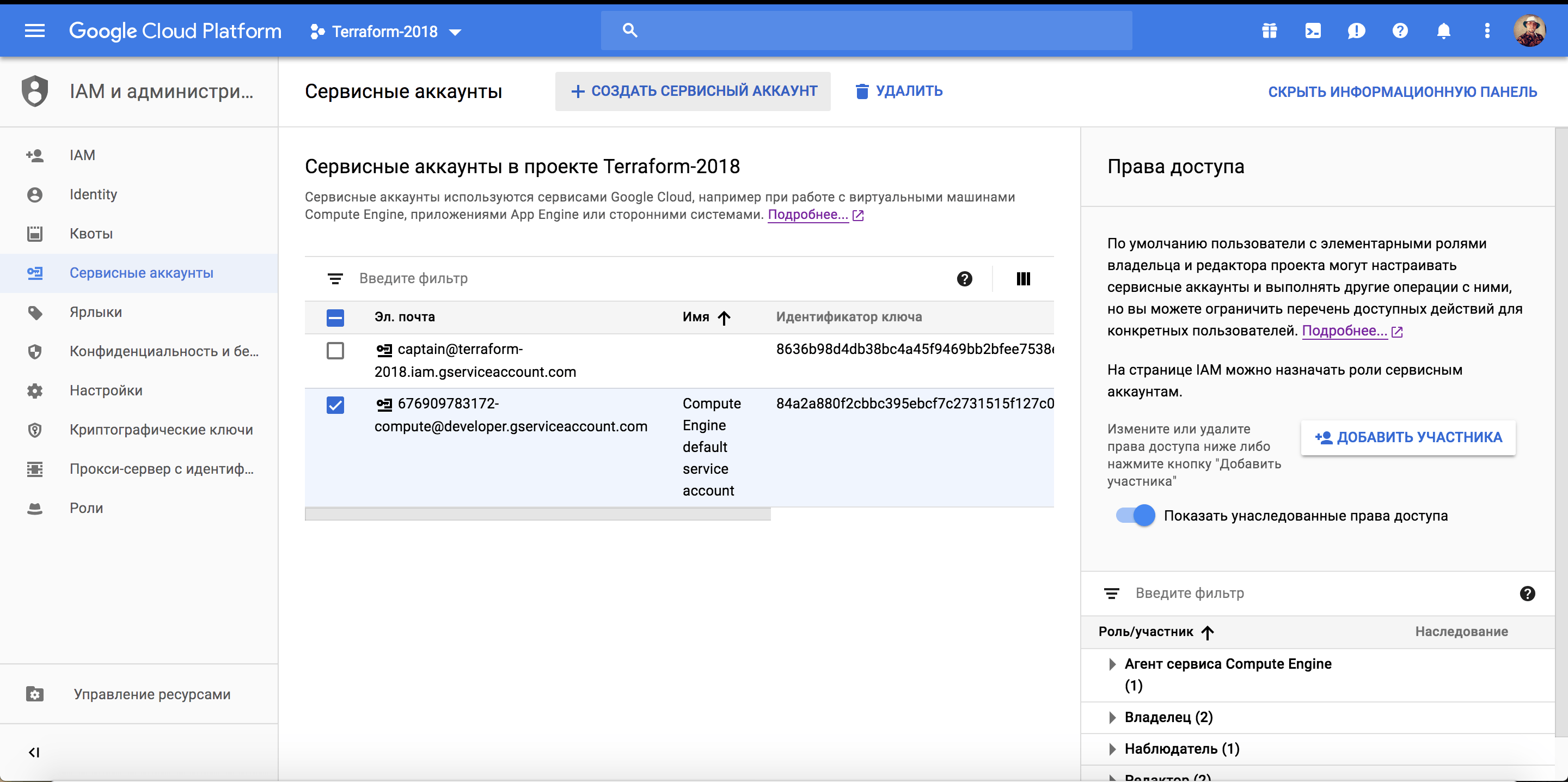

Here is an example of setting up an account. Open the Google Cloud console and go to "IAM & admin" -> "Service accounts":

IAM & admin in Google Cloud

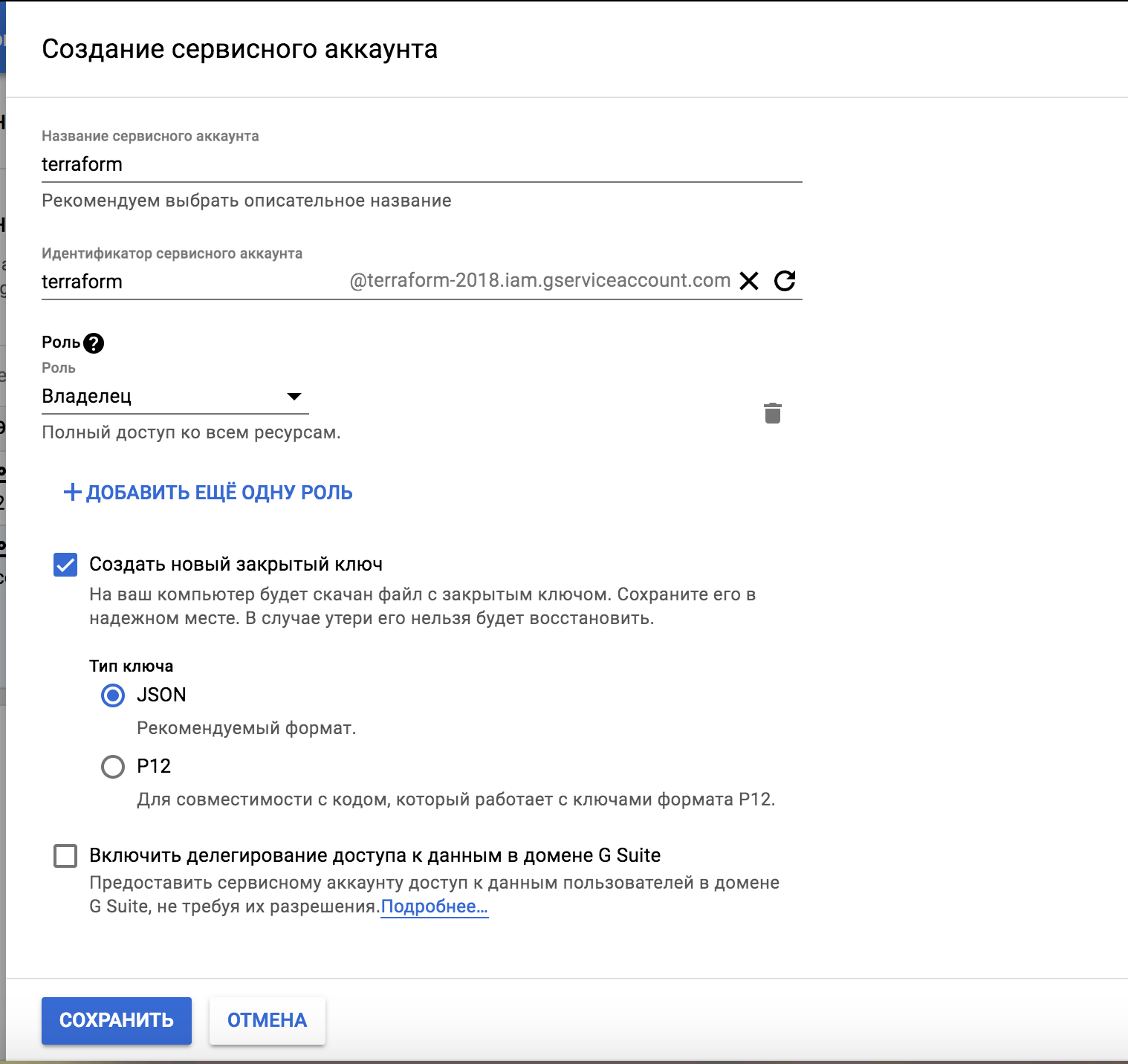

Click "Create service account":

Create a service account in Google Cloud

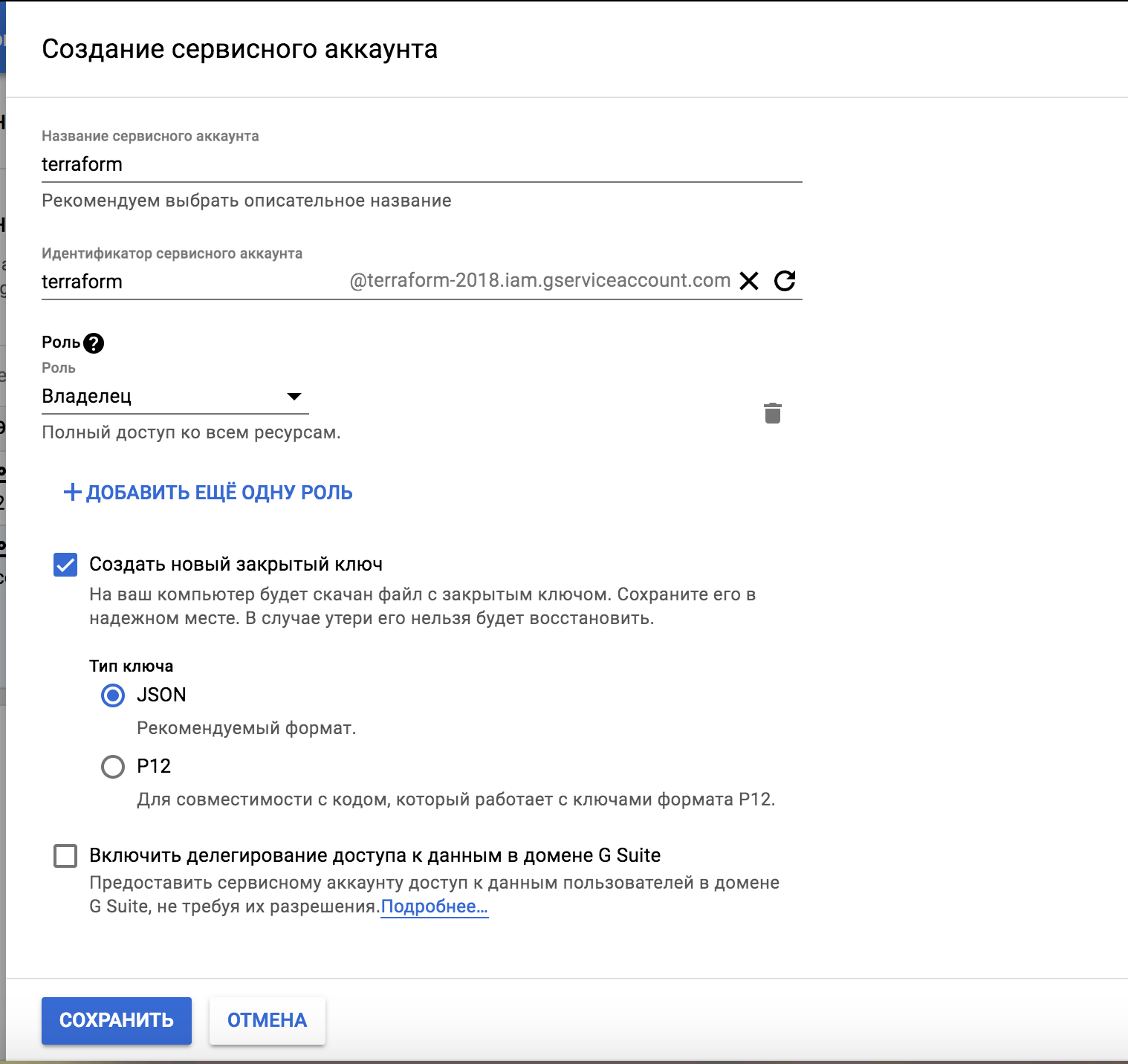

Fill in the required details and click "Save". The account now exists, but it still needs permission to use the required resources.

Select the required policies and click "Save".

PS: All of this can also be done from the command line, as follows:

$ gcloud iam service-accounts create terraform \
    --display-name "Terraform admin account"

$ gcloud iam service-accounts keys create /Users/captain/.config/gcloud/creds/terraform_creds.json \
    --iam-account terraform@terraform-2018.iam.gserviceaccount.com

Where:

- terraform: the name of the user (service account). This account is used in everything that follows.

- /Users/captain/.config/gcloud/creds/terraform_creds.json: the path where the key file is saved.

- terraform-2018: the project name. This project will also appear in my later articles.

Grant the service account the roles it needs on the project; here these are roles/owner on the project and roles/storage.admin for managing Cloud Storage:
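The address passed to `--iam-account` is built from the two names in the list above, the account name and the project ID. A minimal sketch of that pattern, assuming the names used in this article; the helper name `sa_email` is made up for illustration and is not part of gcloud:

```shell
#!/usr/bin/env bash
# Build a service account e-mail from an account name and a project ID.
# sa_email is a hypothetical helper, not a gcloud command.
sa_email() {
  printf '%s@%s.iam.gserviceaccount.com\n' "$1" "$2"
}

# Example with the names used in this article:
sa_email terraform terraform-2018
```

With these inputs the helper prints terraform@terraform-2018.iam.gserviceaccount.com, the same address used in the commands here.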

$ gcloud projects add-iam-policy-binding terraform-2018 \
    --member serviceAccount:terraform@terraform-2018.iam.gserviceaccount.com \
    --role roles/owner

$ gcloud projects add-iam-policy-binding terraform-2018 \
    --member serviceAccount:terraform@terraform-2018.iam.gserviceaccount.com \
    --role roles/storage.admin

Any action performed through Terraform requires the corresponding API to be enabled. In this guide Terraform needs the following:

$ gcloud services enable cloudresourcemanager.googleapis.com

$ gcloud services enable cloudbilling.googleapis.com

$ gcloud services enable iam.googleapis.com

$ gcloud services enable compute.googleapis.com
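The same four calls can be written as a loop that stops at the first failure. This is only a sketch: the function name `enable_apis` and the `GCLOUD` override (used here to preview the commands with `echo` instead of running gcloud) are conventions invented for this example.

```shell
#!/usr/bin/env bash
# Enable several Google APIs in one loop, stopping at the first error.
# GCLOUD may be overridden (for example GCLOUD=echo) to preview the calls;
# this override is an illustration-only convention, not a gcloud feature.
enable_apis() {
  for api in "$@"; do
    ${GCLOUD:-gcloud} services enable "${api}.googleapis.com" || return 1
  done
}

# Preview the calls for the APIs listed above without contacting Google:
GCLOUD=echo enable_apis cloudresourcemanager cloudbilling iam compute
```

Calling `enable_apis` without the `GCLOUD=echo` prefix runs the real `gcloud services enable` command for each name.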

I'm not sure these are all the permissions I will need later. We'll see as we go: I don't have much experience with Google Cloud, so I have to dig into things on the fly. Some of the material out there is badly outdated, even on the official site, which is outrageous!

I have a terraform folder where I keep the providers I work with. Since this example uses google_cloud_platform, I'll create a folder with that name and change into it. Then, inside that folder, create:

$ mkdir examples modules

In the examples folder I'll keep so-called "playbooks" for deploying various services, for example zabbix-server, grafana, web servers and so on. In the modules directory I'll keep all the required modules.

Let's start writing the module. For this task I'll create a folder:

$ mkdir modules/compute_instance

Change into it:

$ cd modules/compute_instance

Open the file:

$ vim compute_instance.tf

Paste the following into it:

#---------------------------------------------------

# Create compute instance

#---------------------------------------------------

resource "google_compute_instance" "compute_instance" {

#count = "${var.number_of_instances}"

count = "${var.enable_attached_disk ? 0 : var.number_of_instances}"

project = "${var.project_name}"

name = "${lower(var.name)}-ce-${lower(var.environment)}-${count.index+1}"

zone = "${var.zone}"

machine_type = "${var.machine_type}"

allow_stopping_for_update = "${var.allow_stopping_for_update}"

can_ip_forward = "${var.can_ip_forward}"

timeouts = "${var.timeouts}"

description = "${var.description}"

deletion_protection = "${var.deletion_protection}"

min_cpu_platform = "${var.min_cpu_platform}"

#scratch_disk {

# #interface = "${var.scratch_disk_interface}"

#}

boot_disk {

auto_delete = "${var.boot_disk_auto_delete}"

device_name = "${var.boot_disk_device_name}"

disk_encryption_key_raw = "${var.disk_encryption_key_raw}"

initialize_params {

size = "${var.boot_disk_initialize_params_size}"

type = "${var.boot_disk_initialize_params_type}"

image = "${var.boot_disk_initialize_params_image}"

}

}

#attached_disk {

# source = "testua666"

# device_name = "testua666"

# mode = "READ_WRITE"

# disk_encryption_key_raw = "${var.disk_encryption_key_raw}"

#}

network_interface {

network = "${var.network}"

subnetwork = "${var.subnetwork}"

subnetwork_project = "${var.subnetwork_project}"

address = "${var.address}"

#alias_ip_range {

# ip_cidr_range = "10.138.0.0/20"

# subnetwork_range_name = ""

#}

access_config {

nat_ip = "${var.nat_ip}"

public_ptr_domain_name = "${var.public_ptr_domain_name}"

network_tier = "${var.network_tier}"

}

}

labels {

name = "${lower(var.name)}-ce-${lower(var.environment)}-${count.index+1}"

environment = "${lower(var.environment)}"

orchestration = "${lower(var.orchestration)}"

}

metadata {

ssh-keys = "${var.ssh_user}:${file("${var.public_key_path}")}"

#shutdown-script = "${file("${path.module}/scripts/shutdown.sh")}"

}

metadata_startup_script = "${file("${path.module}/${var.install_script_src_path}")}"

#

#metadata_startup_script = "echo hi > /test.txt"

#metadata_startup_script = "${file("startup.sh")}"

#metadata_startup_script = <<SCRIPT

# ${file("${path.module}/scripts/install.sh")}

#SCRIPT

#provisioner "file" {

# source = "${var.install_script_src_path}"

# destination = "${var.install_script_dest_path}"

#

# connection {

# type = "ssh"

# user = "${var.ssh_user}"

# port = "${var.ssh_port}"

# private_key = "${file("${var.private_key_path}")}"

# agent = false

# timeout = "5m"

# agent_identity = false

# insecure = true

# }

#}

#provisioner "remote-exec" {

# connection {

# type = "ssh"

# user = "${var.ssh_user}"

# port = "${var.ssh_port}"

# private_key = "${file("${var.private_key_path}")}"

# agent = false

# timeout = "5m"

# }

#

# inline = [

# "chmod +x ${var.install_script_dest_path}",

# "sudo ${var.install_script_dest_path} ${count.index}",

# ]

#}

#

#

tags = [

"${lower(var.name)}",

"${lower(var.environment)}",

"${lower(var.orchestration)}"

]

service_account {

email = "${var.service_account_email}"

scopes = "${var.service_account_scopes}"

}

scheduling {

preemptible = "${var.scheduling_preemptible}"

on_host_maintenance = "${var.scheduling_on_host_maintenance}"

automatic_restart = "${var.scheduling_automatic_restart}"

}

#Note: GPU accelerators can only be used with on_host_maintenance option set to TERMINATE.

guest_accelerator {

type = "${var.guest_accelerator_type}"

count = "${var.guest_accelerator_count}"

}

lifecycle {

ignore_changes = [

"network_interface",

]

create_before_destroy = true

}

}

#---------------------------------------------------

# Create compute instance with attached disk

#---------------------------------------------------

resource "google_compute_instance" "compute_instance_with_attached_disk" {

count = "${var.enable_attached_disk && length(var.attached_disk_source) > 0 ? var.number_of_instances : 0}"

project = "${var.project_name}"

name = "${lower(var.name)}-ce-${lower(var.environment)}-${count.index+1}"

zone = "${var.zone}"

machine_type = "${var.machine_type}"

allow_stopping_for_update = "${var.allow_stopping_for_update}"

can_ip_forward = "${var.can_ip_forward}"

timeouts = "${var.timeouts}"

description = "${var.description}"

deletion_protection = "${var.deletion_protection}"

min_cpu_platform = "${var.min_cpu_platform}"

#scratch_disk {

# #interface = "${var.scratch_disk_interface}"

#}

boot_disk {

auto_delete = "${var.boot_disk_auto_delete}"

device_name = "${var.boot_disk_device_name}"

disk_encryption_key_raw = "${var.disk_encryption_key_raw}"

initialize_params {

size = "${var.boot_disk_initialize_params_size}"

type = "${var.boot_disk_initialize_params_type}"

image = "${var.boot_disk_initialize_params_image}"

}

}

attached_disk {

source = "${var.attached_disk_source}"

device_name = "${var.attached_disk_device_name}"

mode = "${var.attached_disk_mode}"

disk_encryption_key_raw = "${var.disk_encryption_key_raw}"

}

network_interface {

network = "${var.network}"

subnetwork = "${var.subnetwork}"

subnetwork_project = "${var.subnetwork_project}"

address = "${var.address}"

#alias_ip_range {

# ip_cidr_range = "10.138.0.0/20"

# subnetwork_range_name = ""

#}

access_config {

nat_ip = "${var.nat_ip}"

public_ptr_domain_name = "${var.public_ptr_domain_name}"

network_tier = "${var.network_tier}"

}

}

labels {

name = "${lower(var.name)}-ce-${lower(var.environment)}-${count.index+1}"

environment = "${lower(var.environment)}"

orchestration = "${lower(var.orchestration)}"

}

metadata {

ssh-keys = "${var.ssh_user}:${file("${var.public_key_path}")}"

#shutdown-script = "${file("${path.module}/scripts/shutdown.sh")}"

}

metadata_startup_script = "${file("${path.module}/${var.install_script_src_path}")}"

#

#metadata_startup_script = "echo hi > /test.txt"

#metadata_startup_script = "${file("startup.sh")}"

#metadata_startup_script = <<SCRIPT

# ${file("${path.module}/scripts/install.sh")}

#SCRIPT

#provisioner "file" {

# source = "${var.install_script_src_path}"

# destination = "${var.install_script_dest_path}"

#

# connection {

# type = "ssh"

# user = "${var.ssh_user}"

# port = "${var.ssh_port}"

# private_key = "${file("${var.private_key_path}")}"

# agent = false

# timeout = "5m"

# agent_identity = false

# insecure = true

# }

#}

#provisioner "remote-exec" {

# connection {

# type = "ssh"

# user = "${var.ssh_user}"

# port = "${var.ssh_port}"

# private_key = "${file("${var.private_key_path}")}"

# agent = false

# timeout = "5m"

# }

#

# inline = [

# "chmod +x ${var.install_script_dest_path}",

# "sudo ${var.install_script_dest_path} ${count.index}",

# ]

#}

#

#

tags = [

"${lower(var.name)}",

"${lower(var.environment)}",

"${lower(var.orchestration)}"

]

service_account {

email = "${var.service_account_email}"

scopes = "${var.service_account_scopes}"

}

scheduling {

preemptible = "${var.scheduling_preemptible}"

on_host_maintenance = "${var.scheduling_on_host_maintenance}"

automatic_restart = "${var.scheduling_automatic_restart}"

}

#Note: GPU accelerators can only be used with on_host_maintenance option set to TERMINATE.

guest_accelerator {

type = "${var.guest_accelerator_type}"

count = "${var.guest_accelerator_count}"

}

lifecycle {

ignore_changes = [

"network_interface",

]

create_before_destroy = true

}

}
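Both resources build the instance name from `var.name`, `var.environment` and `count.index+1`, lowercased with `lower()`; GCE resource names and label values must be lowercase, which is why the conversion is there. A rough shell sketch of the same naming rule, useful for predicting what will be created (the function name `instance_names` is hypothetical):

```shell
#!/usr/bin/env bash
# Preview the instance names the module would generate.
# Mirrors: "${lower(var.name)}-ce-${lower(var.environment)}-${count.index+1}"
instance_names() {
  local name env count i
  name=$(printf '%s' "$1" | tr '[:upper:]' '[:lower:]')
  env=$(printf '%s' "$2" | tr '[:upper:]' '[:lower:]')
  count="$3"
  i=1
  while [ "$i" -le "$count" ]; do
    printf '%s-ce-%s-%s\n' "$name" "$env" "$i"
    i=$((i + 1))
  done
}

# Name TEST, environment STAGE, two instances:
instance_names TEST STAGE 2
```

For these inputs the sketch prints test-ce-stage-1 and test-ce-stage-2. Only one of the two resources is created at a time, because their `count` expressions are switched by `var.enable_attached_disk`.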

Open the file:

$ vim variables.tf

And add:

variable "name" {

description = "A unique name for the resource, required by GCE. Changing this forces a new resource to be created."

default = "TEST"

}

variable "zone" {

description = "The zone that the machine should be created in"

default = "us-east1-b"

}

variable "environment" {

description = "Environment for service"

default = "STAGE"

}

variable "orchestration" {

description = "Type of orchestration"

default = "Terraform"

}

variable "createdby" {

description = "Created by"

default = "Vitaliy Natarov"

}

variable "project_name" {

description = "The ID of the project in which the resource belongs. If it is not provided, the provider project is used."

default = ""

}

variable "machine_type" {

description = "The machine type to create"

default = "f1-micro"

}

variable "allow_stopping_for_update" {

description = "If true, allows Terraform to stop the instance to update its properties. If you try to update a property that requires stopping the instance without setting this field, the update will fail"

default = true

}

variable "can_ip_forward" {

description = "Whether to allow sending and receiving of packets with non-matching source or destination IPs. This defaults to false."

default = false

}

variable "timeouts" {

description = "Configurable timeout in minutes for creating instances. Default is 4 minutes. Changing this forces a new resource to be created."

default = 4

}

variable "description" {

description = "A brief description of this resource."

default = ""

}

variable "deletion_protection" {

description = "Enable deletion protection on this instance. Defaults to false. Note: you must disable deletion protection before removing the resource (e.g., via terraform destroy), or the instance cannot be deleted and the Terraform run will not complete successfully."

default = false

}

variable "min_cpu_platform" {

description = "Specifies a minimum CPU platform for the VM instance. Applicable values are the friendly names of CPU platforms, such as Intel Haswell or Intel Skylake. Note: allow_stopping_for_update must be set to true in order to update this field."

default = "Intel Haswell"

}

variable "boot_disk_auto_delete" {

description = "Whether the disk will be auto-deleted when the instance is deleted. Defaults to true."

default = true

}

variable "boot_disk_device_name" {

description = "Name with which attached disk will be accessible under /dev/disk/by-id/"

default = ""

}

variable "disk_encryption_key_raw" {

description = "A 256-bit customer-supplied encryption key, encoded in RFC 4648 base64 to encrypt this disk."

default = ""

}

variable "boot_disk_initialize_params_size" {

description = "The size of the image in gigabytes. If not specified, it will inherit the size of its base image."

default = "10"

}

variable "boot_disk_initialize_params_type" {

description = "The GCE disk type. May be set to pd-standard or pd-ssd."

default = "pd-standard"

}

variable "boot_disk_initialize_params_image" {

description = "The image from which to initialize this disk. This can be one of: the image's self_link, projects/{project}/global/images/{image}, projects/{project}/global/images/family/{family}, global/images/{image}, global/images/family/{family}, family/{family}, {project}/{family}, {project}/{image}, {family}, or {image}. If referred by family, the images names must include the family name. If they don't, use the google_compute_image data source. For instance, the image centos-6-v20180104 includes its family name centos-6. These images can be referred by family name here."

default = "centos-7"

}

variable "number_of_instances" {

description = "Number of instances to make"

default = "1"

}

#variable "scratch_disk_interface" {

# description = "The disk interface to use for attaching this disk; either SCSI or NVME. Defaults to SCSI."

# default = "SCSI"

#}

variable "network" {

description = "The name or self_link of the network to attach this interface to. Either network or subnetwork must be provided."

default = "default"

}

variable "subnetwork" {

description = "The name or self_link of the subnetwork to attach this interface to. The subnetwork must exist in the same region this instance will be created in. Either network or subnetwork must be provided."

default = ""

}

variable "subnetwork_project" {

description = "The project in which the subnetwork belongs. If the subnetwork is a self_link, this field is ignored in favor of the project defined in the subnetwork self_link. If the subnetwork is a name and this field is not provided, the provider project is used."

default = ""

}

variable "address" {

description = "The private IP address to assign to the instance. If empty, the address will be automatically assigned."

default = ""

}

variable "nat_ip" {

description = "The IP address that will be 1:1 mapped to the instance's network ip. If not given, one will be generated."

default = ""

}

variable "public_ptr_domain_name" {

description = "The DNS domain name for the public PTR record. To set this field on an instance, you must be verified as the owner of the domain."

default = ""

}

variable "network_tier" {

description = "The networking tier used for configuring this instance. This field can take the following values: PREMIUM or STANDARD. If this field is not specified, it is assumed to be PREMIUM."

default = "PREMIUM"

}

variable "service_account_email" {

description = "The service account e-mail address. If not given, the default Google Compute Engine service account is used. Note: allow_stopping_for_update must be set to true in order to update this field."

default = ""

}

variable "service_account_scopes" {

description = "A list of service scopes. Both OAuth2 URLs and gcloud short names are supported. To allow full access to all Cloud APIs, use the cloud-platform scope. Note: allow_stopping_for_update must be set to true in order to update this field."

default = []

}

variable "scheduling_preemptible" {

description = "Is the instance preemptible."

default = "false"

}

variable "scheduling_on_host_maintenance" {

description = "Describes maintenance behavior for the instance. Can be MIGRATE or TERMINATE"

default = "TERMINATE"

}

variable "scheduling_automatic_restart" {

description = "Specifies if the instance should be restarted if it was terminated by Compute Engine (not a user)."

default = "true"

}

variable "guest_accelerator_type" {

description = "The accelerator type resource to expose to this instance. E.g. nvidia-tesla-k80."

default = ""

}

variable "guest_accelerator_count" {

description = "The number of the guest accelerator cards exposed to this instance."

default = "0"

}

variable "ssh_user" {

description = "User for connection to google machine"

default = "captain"

}

variable "ssh_port" {

description = "Port for connection to google machine"

default = "22"

}

variable "public_key_path" {

description = "Path to file containing public key"

default = "~/.ssh/gcloud_id_rsa.pub"

}

variable "private_key_path" {

description = "Path to file containing private key"

default = "~/.ssh/gcloud_id_rsa"

}

variable "install_script_src_path" {

description = "Path to install script within this repository"

default = "scripts/install.sh"

}

variable "install_script_dest_path" {

description = "Path to put the install script on each destination resource"

default = "/tmp/install.sh"

}

variable "enable_attached_disk" {

description = "Enable attaching disk to node"

default = "false"

}

variable "attached_disk_source" {

description = "The name or self_link of the disk to attach to this instance."

default = ""

}

variable "attached_disk_device_name" {

description = "Name with which the attached disk will be accessible under /dev/disk/by-id/"

default = ""

}

variable "attached_disk_mode" {

description = "Either 'READ_ONLY' or 'READ_WRITE', defaults to 'READ_WRITE' If you have a persistent disk with data that you want to share between multiple instances, detach it from any read-write instances and attach it to one or more instances in read-only mode."

default = "READ_WRITE"

}

This file holds all the variables. Thanks, Captain Obvious!

Open the last file:

$ vim outputs.tf

And paste the following lines into it:

########################################################################

# Compute instance

########################################################################

output "compute_instance_ids" {

description = "The server-assigned unique identifier of this instance."

value = "${google_compute_instance.compute_instance.*.instance_id}"

}

output "compute_instance_metadata_fingerprints" {

description = "The unique fingerprint of the metadata."

value = "${google_compute_instance.compute_instance.*.metadata_fingerprint}"

}

output "compute_instance_self_links" {

description = "output the URI of the created resource."

value = "${google_compute_instance.compute_instance.*.self_link}"

}

output "compute_instance_tags_fingerprints" {

description = "The unique fingerprint of the tags."

value = "${google_compute_instance.compute_instance.*.tags_fingerprint}"

}

output "compute_instance_label_fingerprints" {

description = "The unique fingerprint of the labels."

value = "${google_compute_instance.compute_instance.*.label_fingerprint}"

}

output "compute_instance_cpu_platforms" {

description = "The CPU platform used by this instance."

value = "${google_compute_instance.compute_instance.*.cpu_platform}"

}

output "compute_instance_internal_ip_addresses" {

description = "The internal ip address of the instance, either manually or dynamically assigned."

value = "${google_compute_instance.compute_instance.*.network_interface.0.address}"

}

output "compute_instance_external_ip_addresses" {

description = "If the instance has an access config, either the given external ip (in the nat_ip field) or the ephemeral (generated) ip (if you didn't provide one)."

value = "${google_compute_instance.compute_instance.*.network_interface.0.access_config.0.assigned_nat_ip}"

}

########################################################################

# Compute instance with attached disk

########################################################################

output "compute_instance_with_attached_disk_ids" {

description = "The server-assigned unique identifier of this instance."

value = "${google_compute_instance.compute_instance_with_attached_disk.*.instance_id}"

}

output "compute_instance_with_attached_disk_metadata_fingerprints" {

description = "The unique fingerprint of the metadata."

value = "${google_compute_instance.compute_instance_with_attached_disk.*.metadata_fingerprint}"

}

output "compute_instance_with_attached_disk_self_links" {

description = "output the URI of the created resource."

value = "${google_compute_instance.compute_instance_with_attached_disk.*.self_link}"

}

output "compute_instance_with_attached_disk_tags_fingerprints" {

description = "The unique fingerprint of the tags."

value = "${google_compute_instance.compute_instance_with_attached_disk.*.tags_fingerprint}"

}

output "compute_instance_with_attached_disk_label_fingerprints" {

description = "The unique fingerprint of the labels."

value = "${google_compute_instance.compute_instance_with_attached_disk.*.label_fingerprint}"

}

output "compute_instance_with_attached_disk_cpu_platforms" {

description = "The CPU platform used by this instance."

value = "${google_compute_instance.compute_instance_with_attached_disk.*.cpu_platform}"

}

output "compute_instance_with_attached_disk_internal_ip_addresses" {

description = "The internal ip address of the instance, either manually or dynamically assigned."

value = "${google_compute_instance.compute_instance_with_attached_disk.*.network_interface.0.address}"

}

output "compute_instance_with_attached_disk_external_ip_addresses" {

description = "If the instance has an access config, either the given external ip (in the nat_ip field) or the ephemeral (generated) ip (if you didn't provide one)."

value = "${google_compute_instance.compute_instance_with_attached_disk.*.network_interface.0.access_config.0.assigned_nat_ip}"

}

Inside the module directory, create a `scripts` folder:

$ mkdir scripts

Create a file (a bash script):

$ vim scripts/install.sh

Paste the following text (simple actions, as an example):

#!/bin/bash -x
# CREATED:
# vitaliy.natarov@yahoo.com
#
# Unix/Linux blog:
# http://linux-notes.org
# Vitaliy Natarov
#
sudo yum update -y
sudo yum upgrade -y
sudo yum install epel-release -y
sudo yum install nginx -y
sudo service nginx restart
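The module reads this script with `file()` and passes it to the instance as `metadata_startup_script`, so a typo in it only shows up after the machine has booted. One possible safeguard, sketched here with a hypothetical helper named `check_script`, is to let bash parse the file without executing it (`bash -n`) before running Terraform:

```shell
#!/usr/bin/env bash
# Check a startup script for syntax errors without executing it.
# check_script is a hypothetical helper name used only in this sketch.
check_script() {
  if bash -n "$1"; then
    echo "OK: $1"
  else
    echo "Syntax error in $1" >&2
    return 1
  fi
}

# Usage, from the module directory:
#   check_script scripts/install.sh
```

This catches syntax errors only; it does not verify that the yum packages exist or that nginx actually starts.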

Now go to the google_cloud_platform/examples folder and create one more folder to test what we have written:

$ mkdir compute && cd $_

Inside the new folder, open the file:

$ vim main.tf

And paste the following code into it:

#

# MAINTAINER Vitaliy Natarov "vitaliy.natarov@yahoo.com"

#

terraform {

required_version = "> 0.9.0"

}

provider "google" {

#credentials = "${file("/Users/captain/.config/gcloud/creds/captain_creds.json")}"

credentials = "${file("/Users/captain/.config/gcloud/creds/terraform_creds.json")}"

project = "terraform-2018"

region = "us-east1"

}

module "compute_instance" {

source = "../../modules/compute_instance"

name = "TEST"

project_name = "terraform-2018"

number_of_instances = "2"

service_account_scopes = ["userinfo-email", "compute-ro", "storage-ro"]

enable_attached_disk = false

attached_disk_source = "test-disk-1"

}
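This configuration reads two local files through `file()`: the service account key in the provider block and the public SSH key (`public_key_path` in the module). If either is missing, Terraform fails when it evaluates the configuration. A small pre-flight check can make that failure more obvious; the helper name `preflight` is an assumption of this sketch:

```shell
#!/usr/bin/env bash
# Verify that files referenced by the configuration exist and are readable.
# preflight is a hypothetical helper name used only in this sketch.
preflight() {
  for f in "$@"; do
    if [ ! -r "$f" ]; then
      echo "Missing or unreadable: $f" >&2
      return 1
    fi
  done
  echo "All files present"
}

# Usage with the paths from this article:
#   preflight /Users/captain/.config/gcloud/creds/terraform_creds.json "$HOME/.ssh/gcloud_id_rsa.pub"
```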

Everything is written and ready to use. Let's start testing. In the folder with your playbook, run:

$ terraform init

This initializes the project. Next, pull in the module:

$ terraform get

PS: To pick up changes made to the module itself, you can run:

$ terraform get -update

Validate the configuration:

$ terraform validate

Do a dry run:

$ terraform plan

The output told me that everything is fine and the deployment can be started:

$ terraform apply

As the output shows, everything went smoothly! To delete what was created, run:

$ terraform destroy
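The non-destructive steps above (init, get, validate, plan) can be chained so that a failure in one stops the rest. This is a sketch rather than the author's workflow: the function name `tf_check`, the plan file name `tfplan` and the `TERRAFORM` override (used to preview the commands with `echo`) are all assumptions of this example.

```shell
#!/usr/bin/env bash
# Run the non-destructive steps in order and stop at the first failure.
# TERRAFORM may be overridden (for example TERRAFORM=echo) to preview the
# commands; this override is an illustration-only convention.
tf_check() {
  local tf
  tf="${TERRAFORM:-terraform}"
  $tf init &&
  $tf get -update &&
  $tf validate &&
  $tf plan -out=tfplan
}

# Preview the sequence without invoking terraform:
TERRAFORM=echo tf_check
```

If the saved plan looks right, `terraform apply tfplan` applies exactly what was reviewed.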

I upload all the material to my GitHub account for convenience:

$ git clone https://github.com/SebastianUA/terraform.git

That's all. The article "Working with Google Cloud Platform (compute instance) and Terraform on Unix/Linux" is complete.