Working with AWS CloudWatch and Terraform on Unix/Linux

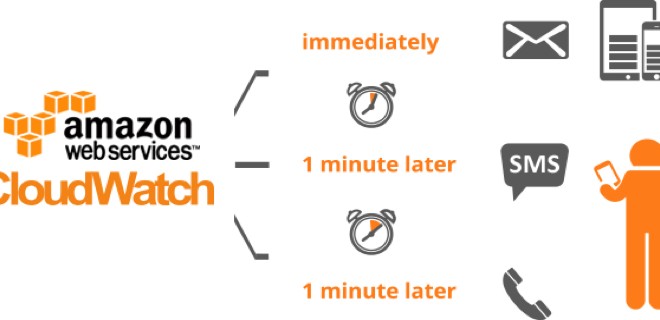

Amazon CloudWatch is a monitoring service for AWS cloud resources and the applications you run on them. You can use it to collect and track metrics, gather and analyze log files, set alarms, and react automatically to changes in your AWS resources. CloudWatch can monitor AWS resources such as Amazon EC2 instances, Amazon DynamoDB tables, and Amazon RDS DB instances, as well as custom metrics from your applications and services and any logs your applications produce. It also gives you a system-wide view of resource utilization, application performance, and overall system health. This data helps you react quickly and keep your applications running reliably.

Installing Terraform on Unix/Linux

Installation is very simple, and I described how to do it here:

Installing Terraform on Unix/Linux

In that article I also wrote a script that installs Terraform automatically. It was tested on CentOS 6/7, Debian 8, and Mac OS X, and it works as expected.

To see help on the available commands, run:

$ terraform

Usage: terraform [--version] [--help] <command> [args]

The available commands for execution are listed below.

The most common, useful commands are shown first, followed by

less common or more advanced commands. If you're just getting

started with Terraform, stick with the common commands. For the

other commands, please read the help and docs before usage.

Common commands:

apply Builds or changes infrastructure

console Interactive console for Terraform interpolations

destroy Destroy Terraform-managed infrastructure

env Workspace management

fmt Rewrites config files to canonical format

get Download and install modules for the configuration

graph Create a visual graph of Terraform resources

import Import existing infrastructure into Terraform

init Initialize a Terraform working directory

output Read an output from a state file

plan Generate and show an execution plan

providers Prints a tree of the providers used in the configuration

push Upload this Terraform module to Atlas to run

refresh Update local state file against real resources

show Inspect Terraform state or plan

taint Manually mark a resource for recreation

untaint Manually unmark a resource as tainted

validate Validates the Terraform files

version Prints the Terraform version

workspace Workspace management

All other commands:

debug Debug output management (experimental)

force-unlock Manually unlock the terraform state

state Advanced state management

Let's get started!

Working with AWS CloudWatch and Terraform on Unix/Linux

I have a terraform folder where I keep the providers I work with. Since this example uses AWS, I create an aws folder inside it and change into it. Then, inside that folder, create two directories:

$ mkdir examples modules

In the examples folder I keep so-called "playbooks" for deploying various services, for example zabbix-server, grafana, web servers, and so on. In the modules directory I keep all the required modules.

Let's start writing the module. For this task I create a folder:

$ mkdir modules/cloudwatch

Change into it:

$ cd modules/cloudwatch

Open the file:

$ vim cloudwatch.tf

Paste the following into this file:

#---------------------------------------------------

# Create AWS CloudWatch metric alarm

#---------------------------------------------------

resource "aws_cloudwatch_metric_alarm" "cw_metric_alarm" {

count = "${var.alarm_name != "" ? 1 : 0}"

alarm_name = "${var.alarm_name}"

comparison_operator = "${var.comparison_operator}"

evaluation_periods = "${var.evaluation_periods}"

metric_name = "${var.metric_name}"

namespace = "${var.namespace}"

period = "${var.period}"

threshold = "${var.threshold}"

datapoints_to_alarm = "${var.datapoints_to_alarm}"

actions_enabled = "${var.actions_enabled}"

dimensions = "${var.dimensions}"

alarm_description = "${var.alarm_description}"

alarm_actions = ["${var.alarm_actions}"]

insufficient_data_actions = "${var.insufficient_data_actions}"

ok_actions = "${var.ok_actions}"

#extended_statistic = "${var.extended_statistic}"

#evaluate_low_sample_count_percentiles = "${var.evaluate_low_sample_count_percentiles}"

statistic = "${var.statistic}"

treat_missing_data = "${var.treat_missing_data}"

}

#---------------------------------------------------

# Create AWS CloudWatch event permission

#---------------------------------------------------

resource "aws_cloudwatch_event_permission" "cw_event_permission" {

count = "${var.principal_for_event_permission != "" ? 1 : 0}"

principal = "${var.principal_for_event_permission}"

statement_id = "${var.statement_id_for_event_permission}"

action = "${var.action_for_event_permission}"

}

#---------------------------------------------------

# Create AWS CloudWatch event rule

#---------------------------------------------------

resource "aws_cloudwatch_event_rule" "cw_event_rule" {

count = "${var.arn_for_cloudwatch_event_target != "" ? 1 : 0}"

name = "capture-aws-sign-in"

description = "Capture each AWS Console Sign In"

event_pattern = <<PATTERN

{

"detail-type": [

"AWS Console Sign In via CloudTrail"

]

}

PATTERN

is_enabled = "${var.is_enabled_for_event_rule}"

}

resource "aws_cloudwatch_event_target" "cloudwatch_event_target" {

count = "${var.arn_for_cloudwatch_event_target != "" ? 1 : 0}"

rule = "${aws_cloudwatch_event_rule.cw_event_rule.name}"

target_id = "${var.target_id_for_cloudwatch_event_target}"

arn = "${var.arn_for_cloudwatch_event_target}"

}

#---------------------------------------------------

# Create AWS CloudWatch dashboard

#---------------------------------------------------

resource "aws_cloudwatch_dashboard" "cloudwatch_dashboard" {

count = "${var.cloudwatch_dashboard_name !="" ? 1 : 0}"

dashboard_name = "${var.cloudwatch_dashboard_name}"

dashboard_body = <<EOF

{

"widgets": [

{

"type":"metric",

"x":0,

"y":0,

"width":12,

"height":6,

"properties":{

"metrics":[

[

"AWS/EC2",

"CPUUtilization",

"InstanceId",

"i-012345"

]

],

"period":300,

"stat":"Average",

"region":"us-east-1",

"title":"EC2 Instance CPU"

}

},

{

"type":"text",

"x":0,

"y":7,

"width":3,

"height":3,

"properties":{

"markdown":"Hello world"

}

}

]

}

EOF

}

#---------------------------------------------------

# Create AWS CloudWatch LOG group

#---------------------------------------------------

resource "aws_cloudwatch_log_group" "cw_log_group" {

count = "${var.name_for_cloudwatch_log_group !="" ? 1 : 0}"

name = "${var.name_for_cloudwatch_log_group}"

retention_in_days = "${var.retention_in_days_for_cloudwatch_log_group}"

kms_key_id = "${var.kms_key_id_for_cloudwatch_log_group}"

tags {

Name = "${var.name}-cw-log-group-${var.environment}"

Environment = "${var.environment}"

Orchestration = "${var.orchestration}"

Createdby = "${var.createdby}"

}

}

#---------------------------------------------------

# Create AWS CloudWatch LOG metric filter

#---------------------------------------------------

resource "aws_cloudwatch_log_metric_filter" "cloudwatch_log_metric_filter" {

count = "${var.name_for_cloudwatch_log_group !="" ? 1 : 0}"

name = "${var.name_for_cloudwatch_log_metric_filter}"

pattern = "${var.pattern_for_cloudwatch_log_metric_filter}"

log_group_name = "${aws_cloudwatch_log_group.cw_log_group.name}"

metric_transformation {

name = "${var.name_for_metric_transformation}"

namespace = "${var.namespace_for_metric_transformation}"

value = "${var.value_for_metric_transformation}"

}

}

#---------------------------------------------------

# Create AWS CloudWatch LOG stream

#---------------------------------------------------

resource "aws_cloudwatch_log_stream" "cloudwatch_log_stream" {

count = "${var.name_for_cloudwatch_log_stream !="" ? 1 : 0}"

name = "${var.name_for_cloudwatch_log_stream}"

log_group_name = "${aws_cloudwatch_log_group.cw_log_group.name}"

}
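Every resource in the module uses the same switch: `count` is 1 when a "trigger" variable is non-empty and 0 otherwise, so a resource is only planned when its variable is set. A minimal sketch of that pattern in isolation, written in the same interpolation style as the module. The variable name `example_log_group_name` and the resource label `example` are hypothetical and are not part of the module:

```hcl
# Hypothetical variable, used only for this illustration.
variable "example_log_group_name" {
  description = "Leave empty to skip creating the log group"
  default     = ""
}

# count evaluates to 1 when a name is supplied and to 0 otherwise,
# so with the default empty string nothing is planned for this resource.
resource "aws_cloudwatch_log_group" "example" {
  count = "${var.example_log_group_name != "" ? 1 : 0}"
  name  = "${var.example_log_group_name}"
}
```

Because the log stream in the module reads `aws_cloudwatch_log_group.cw_log_group.name`, `name_for_cloudwatch_log_group` should also be set whenever `name_for_cloudwatch_log_stream` is.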

Open the file:

$ vim variables.tf

And add:

#-----------------------------------------------------------

# Global or/and default variables

#-----------------------------------------------------------

variable "name" {

description = "Name to be used on all resources as prefix"

default = "TEST-RDS"

}

variable "region" {

description = "The region where to deploy this code (e.g. us-east-1)."

default = "us-east-1"

}

variable "environment" {

description = "Environment for service"

default = "STAGE"

}

variable "orchestration" {

description = "Type of orchestration"

default = "Terraform"

}

variable "createdby" {

description = "Created by"

default = "Vitaliy Natarov"

}

variable "alarm_name" {

description = "The descriptive name for the alarm. This name must be unique within the user's AWS account"

default = ""

}

variable "comparison_operator" {

description = "The arithmetic operation to use when comparing the specified Statistic and Threshold. The specified Statistic value is used as the first operand. Either of the following is supported: GreaterThanOrEqualToThreshold, GreaterThanThreshold, LessThanThreshold, LessThanOrEqualToThreshold."

default = "GreaterThanOrEqualToThreshold"

}

variable "evaluation_periods" {

description = "The number of periods over which data is compared to the specified threshold."

default = "2"

}

variable "metric_name" {

description = "The name for the alarm's associated metric (ex: CPUUtilization)"

default = "CPUUtilization"

}

variable "namespace" {

description = "The namespace for the alarm's associated metric (ex: AWS/EC2)"

default = "AWS/EC2"

}

variable "period" {

description = "The period in seconds over which the specified statistic is applied."

default = "120"

}

variable "statistic" {

description = "The statistic to apply to the alarm's associated metric. Either of the following is supported: SampleCount, Average, Sum, Minimum, Maximum"

default = "Average"

}

variable "threshold" {

description = "The value against which the specified statistic is compared"

default = "80"

}

variable "actions_enabled" {

description = "Indicates whether or not actions should be executed during any changes to the alarm's state. Defaults to true."

default = "true"

}

variable "alarm_actions" {

description = "The list of actions to execute when this alarm transitions into an ALARM state from any other state. Each action is specified as an Amazon Resource Name (ARN)."

type = "list"

default = []

}

variable "alarm_description" {

description = "The description for the alarm."

default = ""

}

variable "datapoints_to_alarm" {

description = "The number of datapoints that must be breaching to trigger the alarm."

default = "0"

}

variable "dimensions" {

description = "List of the dimensions for the alarm's associated metric"

type = "list"

default = []

}

variable "insufficient_data_actions" {

description = "The list of actions to execute when this alarm transitions into an INSUFFICIENT_DATA state from any other state. Each action is specified as an Amazon Resource Name (ARN)."

type = "list"

default = []

}

variable "ok_actions" {

description = "The list of actions to execute when this alarm transitions into an OK state from any other state. Each action is specified as an Amazon Resource Name (ARN)."

type = "list"

default = []

}

variable "unit" {

description = "The unit for the alarm's associated metric."

default = ""

}

variable "extended_statistic" {

description = "The percentile statistic for the metric associated with the alarm. Specify a value between p0.0 and p100."

default = "p100"

}

variable "treat_missing_data" {

description = "Sets how this alarm is to handle missing data points. The following values are supported: missing, ignore, breaching and notBreaching. Defaults to missing."

default = "missing"

}

variable "evaluate_low_sample_count_percentiles" {

description = "Used only for alarms based on percentiles. If you specify ignore, the alarm state will not change during periods with too few data points to be statistically significant. If you specify evaluate or omit this parameter, the alarm will always be evaluated and possibly change state no matter how many data points are available. The following values are supported: ignore, and evaluate."

default = "ignore"

}

variable "principal_for_event_permission" {

description = "The 12-digit AWS account ID that you are permitting to put events to your default event bus. Specify * to permit any account to put events to your default event bus."

default = ""

}

variable "statement_id_for_event_permission" {

description = "An identifier string for the external account that you are granting permissions to."

default = "DevAccountAccess"

}

variable "action_for_event_permission" {

description = "The action that you are enabling the other account to perform. Defaults to events:PutEvents."

default = "events:PutEvents"

}

variable "is_enabled_for_event_rule" {

description = "Whether the rule should be enabled (defaults to true)."

default = "true"

}

variable "arn_for_cloudwatch_event_target" {

description = "The Amazon Resource Name (ARN) of the target that the event rule sends events to."

default = ""

}

variable "target_id_for_cloudwatch_event_target" {

description = "target ID"

default = "SendToSNS"

}

variable "cloudwatch_dashboard_name" {

description = "The name of the dashboard."

default = ""

}

variable "name_for_cloudwatch_log_group" {

description = "The name of the log group. If omitted, Terraform will assign a random, unique name."

default = ""

}

variable "retention_in_days_for_cloudwatch_log_group" {

description = "Specifies the number of days you want to retain log events in the specified log group."

default = "0"

}

variable "kms_key_id_for_cloudwatch_log_group" {

description = "The ARN of the KMS Key to use when encrypting log data. Please note, after the AWS KMS CMK is disassociated from the log group, AWS CloudWatch Logs stops encrypting newly ingested data for the log group. All previously ingested data remains encrypted, and AWS CloudWatch Logs requires permissions for the CMK whenever the encrypted data is requested."

default = ""

}

variable "name_for_cloudwatch_log_metric_filter" {

description = "A name for the metric filter."

default = "metric-filter"

}

variable "pattern_for_cloudwatch_log_metric_filter" {

description = "A valid CloudWatch Logs filter pattern for extracting metric data out of ingested log events."

default = ""

}

variable "name_for_metric_transformation" {

description = "The name of the CloudWatch metric to which the monitored log information should be published (e.g. ErrorCount)"

default = "ErrorCount"

}

variable "namespace_for_metric_transformation" {

description = "The destination namespace of the CloudWatch metric."

default = "NameSpace"

}

variable "value_for_metric_transformation" {

description = "What to publish to the metric. For example, if you're counting the occurrences of a particular term like 'Error', the value will be '1' for each occurrence. If you're counting the bytes transferred the published value will be the value in the log event."

default = "1"

}

variable "name_for_cloudwatch_log_stream" {

description = "The name of the log stream. Must not be longer than 512 characters and must not contain :"

default = ""

}

As the name suggests, this file holds all of the module's variables.
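To make the alarm defaults concrete: with only `alarm_name` set, the values above describe an alarm that fires when the average `CPUUtilization` in `AWS/EC2` is at or above 80 for 2 consecutive periods of 120 seconds. A sketch of a roughly equivalent stand-alone resource, with the defaults written out. The resource label `defaults_resolved` and the alarm name are illustrative assumptions, and arguments left at their empty defaults are omitted:

```hcl
# Illustration only: the module defaults, resolved by hand.
resource "aws_cloudwatch_metric_alarm" "defaults_resolved" {
  alarm_name          = "example-alarm"
  comparison_operator = "GreaterThanOrEqualToThreshold"
  evaluation_periods  = "2"
  metric_name         = "CPUUtilization"
  namespace           = "AWS/EC2"
  period              = "120"
  statistic           = "Average"
  threshold           = "80"
  actions_enabled     = "true"
  treat_missing_data  = "missing"
}
```

Any of these values can be changed by passing the matching variable in the module call.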

Open the last file:

$ vim outputs.tf

And paste the following lines into it:

output "cw_metric_alarm_ids" {

description = ""

value = "${aws_cloudwatch_metric_alarm.cw_metric_alarm.*.id}"

}

output "cw_event_permission_ids" {

description = ""

value = "${aws_cloudwatch_event_permission.cw_event_permission.*.id}"

}

output "cw_event_rule_ids" {

description = ""

value = "${aws_cloudwatch_event_rule.cw_event_rule.*.id}"

}

output "cw_event_rule_names" {

description = ""

value = "${aws_cloudwatch_event_rule.cw_event_rule.*.name}"

}

output "cloudwatch_dashboard_ids" {

description = ""

value = "${aws_cloudwatch_dashboard.cloudwatch_dashboard.*.id}"

}

output "cloudwatch_dashboard_names" {

description = ""

value = "${aws_cloudwatch_dashboard.cloudwatch_dashboard.*.dashboard_name}"

}

output "cw_log_group_ids" {

description = ""

value = "${aws_cloudwatch_log_group.cw_log_group.*.id}"

}

output "cw_log_group_names" {

description = ""

value = "${aws_cloudwatch_log_group.cw_log_group.*.name}"

}

output "cloudwatch_log_metric_filter_ids" {

description = ""

value = "${aws_cloudwatch_log_metric_filter.cloudwatch_log_metric_filter.*.id}"

}

output "cloudwatch_log_metric_filter_names" {

description = ""

value = "${aws_cloudwatch_log_metric_filter.cloudwatch_log_metric_filter.*.name}"

}

output "cloudwatch_log_stream_ids" {

description = ""

value = "${aws_cloudwatch_log_stream.cloudwatch_log_stream.*.id}"

}

output "cloudwatch_log_stream_names" {

description = ""

value = "${aws_cloudwatch_log_stream.cloudwatch_log_stream.*.name}"

}
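By default, `terraform output` shows only the outputs of the root configuration, so the configuration that calls the module has to re-export whatever it wants to see. A sketch of how that might look, assuming the module block is named `cloudwatch`. The output names `alarm_ids` and `log_group_names` are hypothetical:

```hcl
# Re-export two of the module's outputs from the calling configuration.
# The names on the right come from the module's outputs.tf.
output "alarm_ids" {
  value = "${module.cloudwatch.cw_metric_alarm_ids}"
}

output "log_group_names" {
  value = "${module.cloudwatch.cw_log_group_names}"
}
```

After `terraform apply`, these values can be read with `terraform output alarm_ids`.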

Now go to the aws/examples folder and create one more folder to test what we have written:

$ mkdir cloudwatch && cd $_

Inside the new folder, open the file:

$ vim main.tf

And paste the following code into it:

#

# MAINTAINER Vitaliy Natarov "vitaliy.natarov@yahoo.com"

#

terraform {

required_version = "> 0.9.0"

}

provider "aws" {

region = "us-east-1"

profile = "default"

}

module "cloudwatch" {

source = "../../modules/cloudwatch"

dimensions = [

{

AutoScalingGroupName = ""

#AutoScalingGroupName = "${aws_autoscaling_group.bar.name}"

}

]

alarm_name = "My first alarm"

#alarm_description = "Test description"

#alarm_actions = "${aws_autoscaling_policy.bat.arn}"

#

#principal_for_event_permission = "XXXXXXXXXXXXXXX"

#arn_for_cloudwatch_event_target = "arn:aws:sns:us-east-1:XXXXXXXXXXXXXXX:test-sns-sns-prod"

#

#name_for_cloudwatch_log_group = "test-log-group"

#

#name_for_cloudwatch_log_stream = "test-log-stream"

}
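The commented-out lines hint at the other resources the module can create. A sketch of a separate call that enables the log group, its metric filter, and a log stream. The block name `cloudwatch_logs`, the retention value, and the filter pattern are illustrative assumptions:

```hcl
module "cloudwatch_logs" {
  source = "../../modules/cloudwatch"

  # A non-empty log group name switches count from 0 to 1 for the
  # log group and for the metric filter attached to it.
  name_for_cloudwatch_log_group              = "test-log-group"
  retention_in_days_for_cloudwatch_log_group = "14"

  # Publish 1 to the default ErrorCount metric for each matching log event.
  pattern_for_cloudwatch_log_metric_filter = "ERROR"

  # The stream is created inside the log group declared above.
  name_for_cloudwatch_log_stream = "test-log-stream"
}
```

Since `alarm_name`, `principal_for_event_permission`, `arn_for_cloudwatch_event_target`, and `cloudwatch_dashboard_name` stay at their empty defaults, this call creates no alarm, event resources, or dashboard.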

More useful articles:

Working with AWS IAM and Terraform on Unix/Linux

Working with AWS VPC and Terraform on Unix/Linux

Working with AWS S3 and Terraform on Unix/Linux

Working with AWS EC2 and Terraform on Unix/Linux

Working with AWS ASG (auto scaling group) and Terraform on Unix/Linux

Working with AWS ELB and Terraform on Unix/Linux

Working with AWS Route53 and Terraform on Unix/Linux

Working with AWS RDS and Terraform on Unix/Linux

Working with AWS SNS and Terraform on Unix/Linux

Working with AWS SQS and Terraform on Unix/Linux

Working with AWS KMS and Terraform on Unix/Linux

Working with AWS NLB and Terraform on Unix/Linux

Everything is written and ready to use, so let's start testing. In the folder with your playbook, run:

$ terraform init

This initializes the project. Next, I pull in the module:

$ terraform get

PS: To pick up changes made in the module itself, you can run:

$ terraform get -update

Validate the configuration:

$ terraform validate

Do a dry run:

$ terraform plan

The output showed that everything is fine and the deployment can be started:

$ terraform apply

As the output shows, everything went smoothly! To remove what was created, run:

$ terraform destroy

I upload all of this material to my GitHub account for convenience:

$ git clone https://github.com/SebastianUA/terraform.git

That's all. The article "Working with AWS CloudWatch and Terraform on Unix/Linux" is complete.